Seerist, Deloitte: Foreign Influence Risk Index (FIRI)

Product UX/UI Design Lead

Client: Seerist, Deloitte

In Collaboration with: Chief Technology Officer, Data Science Team, Dev Team, Deloitte Team

Acknowledgments

Seerist's Foreign Influence Risk Index (FIRI) achieved "Awardable" status through the Pentagon Chief Digital and Artificial Intelligence Office’s (CDAO) Tradewinds Solutions Marketplace. The Tradewinds Solutions Marketplace has recognized the immense potential of FIRI in capturing, tracking, and analyzing great power competition activities.

Context

Seerist, in collaboration with client Deloitte, wanted to launch a new product in the Security, Risk, and Threat industry for users who needed to understand the Foreign Influence Risk Index (FIRI) of specific countries.

The product would offer users the ability to view a country’s influence globally and on specific countries. The types of influence to be measured were Political, Military, Economic, Social, Information, and Infrastructure (referred to as PMESII).

Throughout the year of development, I conducted weekly stakeholder interviews to gather feedback. I analyzed all feedback and incorporated it into the prototype for presentation at the next meeting.

I also conducted user testing to gather feedback that would build stakeholder buy-in for my usability concerns.

Initial Sketch

The initial concept for FIRI was a dashboard, but this concept was set aside so that the product could accommodate all data and view types and show more information.

Challenges

First UX Project of My Career:

Challenge: FIRI was the first UX project of my career, and I was the lead and only UX Product Designer. While users and stakeholders at both Seerist and Deloitte were happy with the shipped product, in retrospect there are aspects of the design and usability that I would approach differently now, three years into my career.

Solution: I’ve provided reimagined conceptual wireframes that adhere more closely to UX standards and align more closely with the recently created Seerist UI guidelines, which I believe is important for product consistency.

No UI Guidelines for Coloring:

Challenge: An outside marketing team provided Seerist’s brand colors about one month into this project, so there were no UI guidelines for color use. The Seerist product itself had no color assignments either, and I was concerned about design consistency between the Seerist and FIRI products. However, the Chief Technology Officer’s feedback was that FIRI users would not necessarily be Seerist users. While this was true to an extent, User Testing revealed some overlap between Seerist and FIRI users.

Solution: I created a set of UI Guidelines for FIRI that focused on usability. For example, I used Seerist’s Lime Green to guide users through key user flows (navigation, buttons, etc.).

Assigning Brand Colors for Map:

Challenge: Assigning brand colors for the Map proved challenging. I needed five-color ranges to convey meaning for both the country coloring and the article dots, and both ranges needed adequate color contrast within themselves as well as against each other.

Solution: Early in my career, I focused on ensuring enough contrast within the color range used for the countries, and between those country colors and the article dots. This required more colors than the brand palette provided, so I consulted the marketing team, who gave me permission to add shade ranges for the tertiary brand colors. If I revisited this project today, I’d dive deeper into the accessibility of this map coloring.
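To make "adequate contrast" concrete, a palette can be screened with the WCAG 2.x contrast-ratio formula. The following is a minimal Python sketch, not the process used on FIRI: the hex values are hypothetical placeholders rather than Seerist’s brand colors, and using the 3:1 non-text contrast threshold (WCAG 2.1 Success Criterion 1.4.11) as the cutoff is an assumption for illustration.

```python
from itertools import combinations, product


def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of an sRGB color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    # Convert each 8-bit channel to 0..1, then undo the sRGB gamma curve.
    channels = [int(h[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    r, g, b = [c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
               for c in channels]
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(a: str, b: str) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05): 1 for identical colors, 21 for black on white."""
    la, lb = relative_luminance(a), relative_luminance(b)
    return (max(la, lb) + 0.05) / (min(la, lb) + 0.05)


def weak_pairs_within(palette, minimum=3.0):
    """Pairs inside one color range (e.g. the country shades) that fall below `minimum`."""
    return [(a, b, round(contrast_ratio(a, b), 2))
            for a, b in combinations(palette, 2)
            if contrast_ratio(a, b) < minimum]


def weak_pairs_between(countries, dots, minimum=3.0):
    """Country-color / article-dot-color pairs that fall below `minimum`."""
    return [(c, d, round(contrast_ratio(c, d), 2))
            for c, d in product(countries, dots)
            if contrast_ratio(c, d) < minimum]


# Hypothetical palettes for illustration only (not the actual FIRI colors).
country_range = ["#f2f7e6", "#cfe3a1", "#a6cc4f", "#6f8f2a", "#3b4d16"]
dot_range = ["#ffffff", "#1b1b1b"]

print(weak_pairs_within(country_range))
print(weak_pairs_between(country_range, dot_range))
```

Pairs flagged by the first check are candidates for wider lightness steps within the range; pairs flagged by the second point to article dots that may blend into a country’s fill.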

Development in Streamlit:

Challenge: Unlike Seerist, FIRI was developed in a separate tool, Streamlit. The Chief Technology Officer chose Streamlit because it aligned with future product goals, but it also imposed limitations on map and component functionality. The engineer wasn’t assigned until a few months into the project, so early wireframe designs had to be iterated on to accommodate Streamlit’s limitations.

Solution: I worked closely with the engineer to understand Streamlit’s limitations and provide the most usable design solutions possible within them.

Low Fidelity Iteration

Low fidelity wireframes were created based on feedback received from weekly meeting interviews with stakeholders from Seerist and Deloitte.

Map View

Graph View

Data View with Implemented Early Stakeholder Interview Feedback

Early stakeholder interviews at Seerist led to design solutions such as coloring the “Change in Influence” column to draw the user’s attention to important changes in foreign influence risks.

Stakeholder Feedback

During weekly interviews with stakeholders at both Seerist and Deloitte, I received feedback and incorporated it into an interactive prototype for the following week. Because so much feedback came in, stakeholders at Seerist filtered it by what was feasible (or what was feasible for the MVP).

Feedback received during this iterative period included:

Higher-ups at Deloitte do not like the radar graph.

Graph feedback: what graphs are needed and which are not.

Data Analysts specifically advised that only bar, line, and pie charts are useful.

Data needed further back in time than the models provided

Heatmap over time to compare two countries

Feature for personalized polygons of interest, as in Seerist

Clicking on a country should zoom in

Ability to click on a point in the line graph to understand what event caused that spike or drop

Time filter options: day, week, month, year

Filter persistence across all three views (Map, Graph, Data):

Time filter would be useful for map articles and graphs (not data view)

Influence scores needed on Map

Ability to see which article is selected on Map

PMESII tags for article cards

Keeping Streamlit default filters for Graphs and Data table: stakeholders felt that these default features gave the user more options.

I was concerned that users would be confused by these component features because they didn’t align with the intended use of our product, but I was unable to gain stakeholder buy-in for removing them because overriding them in code would take more time.

Color connotation for Influence scoring

Do not use Green as it conveys “good”

Do not use Red as it conveys “bad”

What are the color connotations for those assigned for PMESII?

I explored this in depth during stakeholder and user interviews; the resulting feedback raised no issues and no suggestions for the colors I assigned to PMESII.

Exploration for Visually Conveying Data

Based on feedback from stakeholders at Deloitte and Seerist, I explored various design solutions for users to visualize data. The Data Science team further advised that the only relevant graph types for our data were bar, line, and pie charts.

Graph Exploration

Data View Exploration

Mid Iterations

As the product was being built, I worked closely with engineering to understand the limitations (coloring, component function) and challenges that arose from developing in Streamlit.

Wireframe Design in Figma: Map View

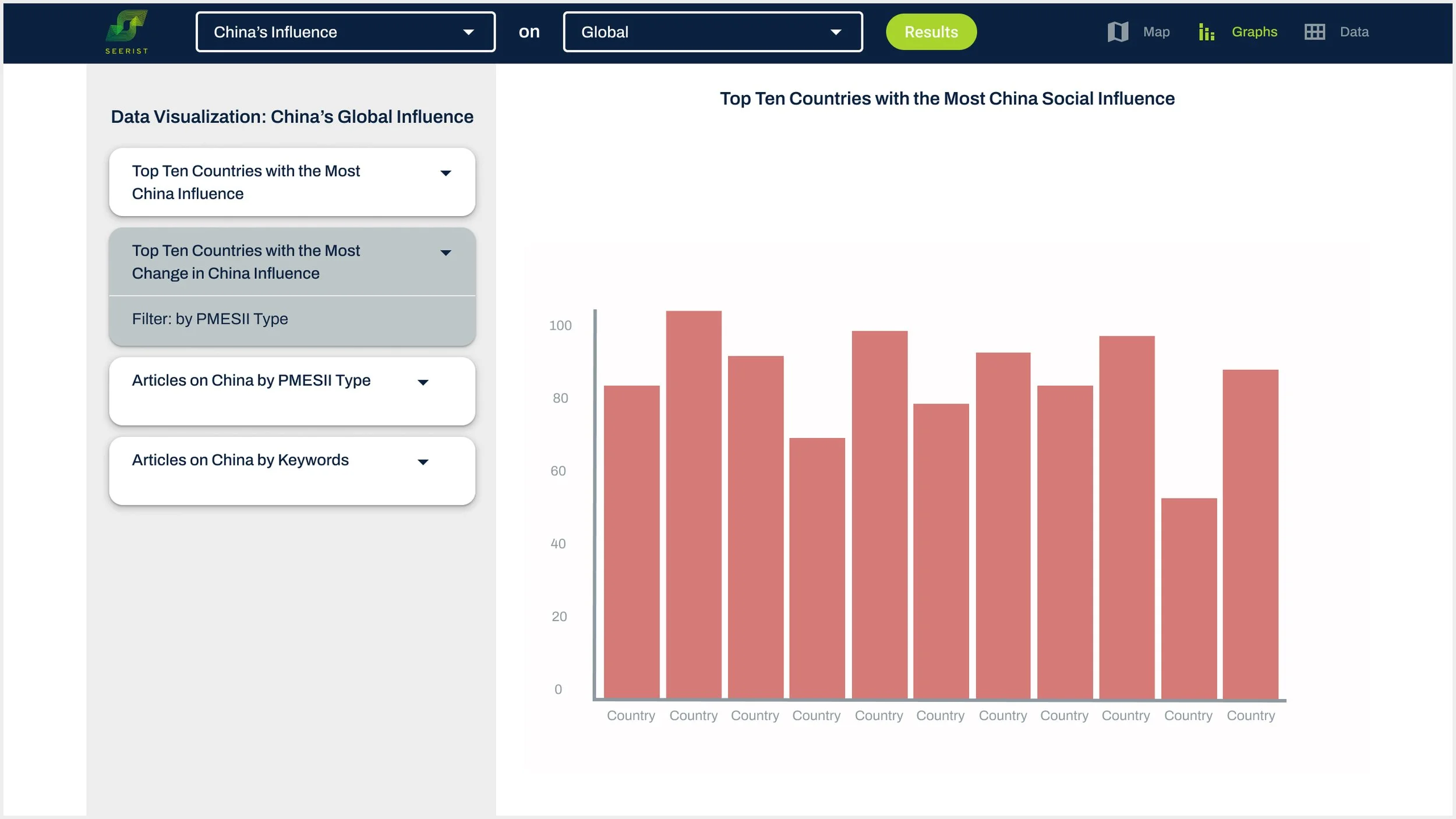

Wireframe Design in Figma: Graph View

Wireframe Design in Figma: Data View

Screenshot of FIRI in Streamlit: Map View

Screenshot of FIRI in Streamlit: Graph View

Streamlit had default component behaviors that would later prove to confuse users. For example, the only way to list graph types (on the left) was with cards that collapsed when clicked, so a Select button had to be added, but the collapse behavior could not be removed. There was also no way to shade a card to show the user which graph was selected. In addition, all filters had to be placed inside each graph’s card, which confused users: they thought the left panel was a list of filters for a single graph rather than a list of graph options.

Screenshot of FIRI in Streamlit: Data View

Streamlit had default table and graph filters that didn’t benefit the user. I wanted to remove them because they didn’t support key user tasks, but stakeholders wanted to keep them because they felt the filters gave the user more options, and removing them would be difficult in code. During User Testing, users found these default filters confusing, which caused problems completing workflows.

User Testing

Overview

I specifically interviewed users who were using the platform for the first time because I wanted to understand the design’s usability from two aspects:

Intuitiveness

Learnability

I tested three User Flows centered on key user workflows where I had usability concerns.

The users were selected by Deloitte.

Ten users were interviewed and tested.

The six users whose backgrounds were relevant to the target users were used for Affinity Mapping to track trends.

All User Testing Feedback was communicated in the weekly stakeholder meetings with implementation suggestions in order to collaborate on solutions across teams.

Script for User Testing

User Flow 1: “You want to know in which countries China has the least influence. Using the Graphs, please find the countries where China has the least Influence.”

User Flow 2: “You want to know in which countries China’s influence has changed. Using the Data table, please find the countries where China has had the greatest increase in Influence.”

User Flow 3: “You would like to know which country China has the most Political Influence on in the world. Please find which country this is, and what China’s Political Influence score is on it.”

Because I tested only users who had never seen the product, in the first two questions I told them which section of the website to find the data in, so I could understand whether those two sections had usability issues. For the third question, I didn’t tell them where to find the data, to see how easily a first-time user could navigate the website.

Summary of Results

The product had low usability in terms of intuitiveness for first-time users.

The product had high usability in terms of learnability, as reported by the first-time users.

User Feedback

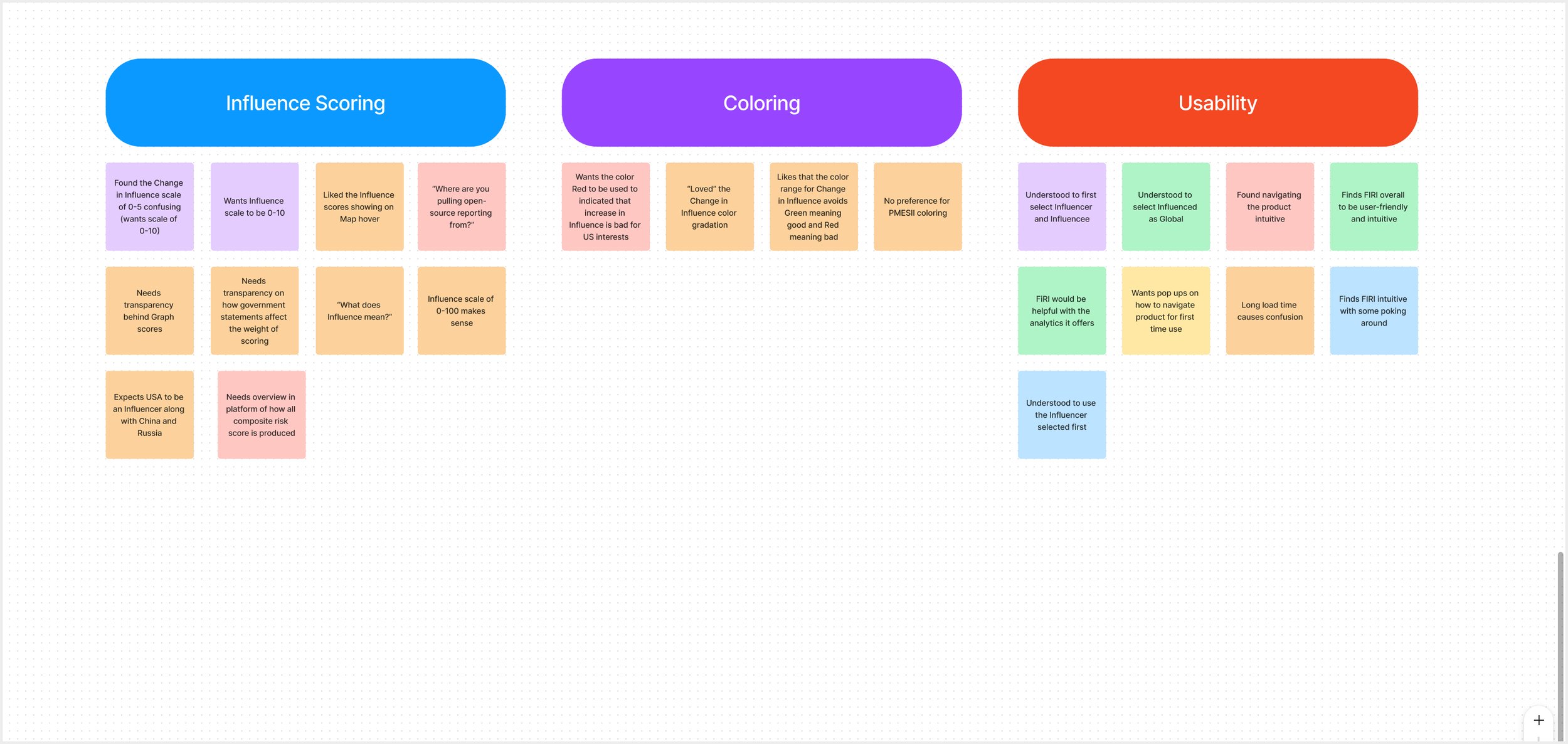

User feedback was placed onto sticky notes in Figma’s FigJam with each user being assigned a color.

Affinity Mapping

The sticky notes were then organized into categories to understand trends and determine product implications.

User Testing: Turning Research Trend Insights into Product Solutions

Map View

Interactions users expected

Hover: Influence Scores

Click: users’ expectations varied (score transparency, zoom, and country data)

My concern about taking the user to Country Data was the trend of confusion when users were taken away from their current work. Possible solutions:

Open in new tab

Open pop-up

Split screen view: a couple of users wanted a split screen to see the Map and Data views at the same time (the initial dashboard concept)

Graph View

Graph selection

Streamlit limited how we could convey to the user which Graph is selected (the card design couldn’t be modified). Streamlit also offered no way to collapse the unused Graph cards when one is selected.

Suggested solution: update the hierarchy of the graph titles on the cards to convey to users that there are multiple graphs, which was implemented.

Suggested solution: add a Select button to the cards, which was implemented.

Radar Graph: while many stakeholders didn’t like the Radar Graph, it was so popular with users that it was the only graph that consistently received praise. I used this insight to gain stakeholder buy-in for keeping it and for adding the variations users requested:

Over time

Country comparisons

Graph filter names assigned by the Data Science team caused a usability issue. I suggested filter naming conventions that adhered to real-world connotations, which was implemented.

Data View

Data manipulation options need to be more obvious: users struggled to find information because the Streamlit default filters and sorts within the Graphs and Data table confused them. I communicated this issue and gained stakeholder buy-in that, rather than giving users more, these defaults caused confusion that kept users from accomplishing user flow goals. I suggested we remove these default filters, which was implemented.

Data table sorting feature: with the Streamlit default sort and filter features removed, I suggested adding a column title highlight and arrow to indicate both which column is being sorted and where our sort feature is located. I retested this solution with other users, who then easily understood how to sort the Data table.
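The column-header sort pattern can be sketched in plain Python. This is a minimal, framework-agnostic illustration, not the shipped Streamlit code: the `SortState` class, the descending default on a first click, the arrow glyphs, and the column names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical glyphs shown next to the sorted column title.
ARROWS = {"asc": "▲", "desc": "▼"}


@dataclass
class SortState:
    column: Optional[str] = None
    direction: str = "desc"

    def click(self, column: str) -> None:
        """Clicking a new column sorts it descending; clicking the same column flips direction."""
        if column == self.column:
            self.direction = "asc" if self.direction == "desc" else "desc"
        else:
            self.column, self.direction = column, "desc"


def header_labels(columns, state):
    """Highlight the sorted column with an arrow, so users see what is sorted and where to sort."""
    return [f"{c} {ARROWS[state.direction]}" if c == state.column else c
            for c in columns]


def sort_rows(rows, state):
    """Return rows ordered by the selected column (unsorted if no column is selected)."""
    if state.column is None:
        return list(rows)
    return sorted(rows, key=lambda r: r[state.column],
                  reverse=state.direction == "desc")


# Hypothetical example data.
rows = [{"Country": "A", "Change in Influence": 1},
        {"Country": "B", "Change in Influence": 3}]
state = SortState()
state.click("Change in Influence")
print(header_labels(["Country", "Change in Influence"], state))
print(sort_rows(rows, state))
```

Defaulting to descending on the first click is a design assumption here: it surfaces the largest changes first, matching tasks like finding where influence increased most.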

Data: Country Select

Since this was my first professional UX project, I struggled with intuitive navigation. Users intuitively wanted to click on a country within the Global Data view and be taken to that country’s Data view, but were then confused about how to return to the Global Data view. The solution, adding an information icon to the top navigation so users could access this without the confusion of being taken to a different part of the site, proved to be one that follow-up users were happy with.

Influence Scoring and PMESII Methodology

Transparency and weighting of Influence scores: the trend was that various users wanted access to this information from different views (Map, Graph, Data). I asked the users who raised this issue where they would expect to find this information. Based on the trending feedback, I suggested adding an information icon to the top navigation bar so users could access this information from any view (Map, Graph, Data), which was implemented. Follow-up users were then able to quickly find this information.

Users were confused by a Change in Influence scale of 0–5 and unanimously preferred a scale of 0–10. This was implemented.

Coloring

Color connotation: I explored this in depth during stakeholder and user interviews; the resulting feedback raised no issues and no suggestions for the colors I assigned to PMESII.

Usability

Workflow starting point: users were often confused, thinking they had to start their workflow on the Map view because it was the landing screen. This led me to question which view should be the landing screen (and the first view in the top navigation order).

I noticed this was only an issue for first-time users when they first started using the product, and not once they were ten minutes in.

This information was presented to stakeholders, and it was decided not to make any changes to design.

Filter persistence across views (Map, Graph, Data)

Some users expected their filters to persist, either from one view to the next or at least when returning to a specific view. This was the reasoning behind the initial dashboard concept wireframe.

This information was presented in collaboration and stakeholder meetings to understand Streamlit’s limitations and reach a final stakeholder decision for the MVP.
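The persistence users expected can be sketched as filters stored once and read by every view, rather than kept separately per view. In Streamlit, `st.session_state` is the usual place for state that survives reruns within a user session; this minimal sketch uses a plain dataclass so it runs without Streamlit. The `FilterStore` name and the filter keys are hypothetical. It also encodes the stakeholder note that the time filter applies to Map articles and Graphs but not to the Data view.

```python
from dataclasses import dataclass, field

VIEWS = ("Map", "Graph", "Data")
# Per stakeholder feedback: the time filter applies to Map articles and Graphs, not the Data view.
TIME_FILTER_VIEWS = {"Map", "Graph"}


@dataclass
class FilterStore:
    """Holds each filter once so every view reads the same selections."""
    shared: dict = field(default_factory=dict)

    def set_filter(self, key: str, value) -> None:
        self.shared[key] = value

    def filters_for(self, view: str) -> dict:
        """Return the filters that apply to `view`, dropping the time filter for the Data view."""
        if view not in VIEWS:
            raise ValueError(f"unknown view: {view}")
        return {k: v for k, v in self.shared.items()
                if k != "time" or view in TIME_FILTER_VIEWS}


# Hypothetical usage: a filter set on the Map view is still applied on the Graph view.
store = FilterStore()
store.set_filter("influencer", "China")
store.set_filter("time", "month")
print(store.filters_for("Graph"))
print(store.filters_for("Data"))
```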

Future Influencer Countries were added to the dropdown as greyed-out options to convey to users that more Influencer Countries were coming.

Mockups for Marketing

I worked closely with the marketing team to create mockups for marketing materials.

1. FIRI One Pager

2. FIRI: Page One

3. FIRI: Page Two

Retrospective

I wasn’t in a position to advocate for User Testing, but I would have liked:

To have started with User Testing to inform initial design solutions, such as the user’s view and navigation needs.

To have incorporated User Testing more frequently into the iterative design process.

As this was my first project in my career as a UX/UI Product Designer, I’m not happy with the wireframe design. Revisiting this project today, I’d redesign the wireframes.

I’d adhere more closely to UX standards.

I’d align the design more closely with the recently created Seerist UI guidelines for design consistency across products.

Data View

I wouldn’t use a dark background for the Data View, since there was no usability reason for it.

I would have kept design consistency with a light background.

Accessibility: I would check the accessibility of the Map coloring.

Map View

Data View